Ouroboros Phalanx - Breaking Grinding Incentives

Abstract

We propose an extension to Ouroboros Praos, called Ouroboros Phalanx. The name derives from the Phalanx, an Ancient Greek military formation where soldiers stood in tightly packed units, shielding one another to form a nearly impenetrable defense. Just as the phalanx multiplied the strength of individual soldiers through coordination, this protocol enhances Cardano’s consensus by reinforcing its resistance to adversarial attacks.

At its core, Phalanx Protocol strengthens the VRF-based randomness generation sub-protocol that underpins leader election. It introduces an additional cryptographic primitive that is lightweight for honest participants yet computationally expensive for adversaries seeking to bias slot leader distributions. This design does not eliminate grinding attacks outright but makes them economically infeasible at scale.

By addressing both CPS-0021: Randomness Manipulation and CPS-0017: Settlement Speed, Phalanx achieves two goals simultaneously:

- It raises the cost of grinding attacks by a factor of roughly 1010.

- It reduces settlement time by approximately 20–30% compared to unmodified Praos, without compromising security.

Ouroboros Phalanx therefore represents a complementary advancement: reinforcing Cardano’s consensus security while improving performance, and ensuring the network remains robust against future adversarial strategies.

Motivation: why is this CIP necessary?

This proposal strengthens Cardano’s consensus protocol (Ouroboros Praos) against a class of attacks known as grinding attacks. These attacks allow adversaries to bias the randomness used in block leader elections in their favor, statistically slowing down settlement times and thys weakening the effectivness of Praos.

The improvement introduces an additional computation step that is lightweight for honest participants but significantly more expensive for attackers, making grinding attacks economically infeasible.

Recommended Configuration

As an initial configuration, we recommend 12 hours of cumulative and distributed execution of this cryptographic primitive per epoch on standard CPU architectures.

- The epoch is divided into 1-hour intervals.

- The first leader of each interval must produce the corresponding proof.

- For any individual node, this requirement represents roughly 527 seconds (≈10 minutes) of computation.

The algorithm is designed so that with 128-bit confidence, all required proofs will be produced on time by the end of each epoch.

Security Improvements

The proposal increases substantially the computational cost of a grinding attack by a factor of approximately 1010 compared to the current situation.

To maintain this level of security over time:

- Governance may choose to increase the 12-hour budget as the cost of computation decreases.

- Execution could migrate to ASIC-based architectures, preserving the same budget while maintaining security guarantees, and later increasing the budget further.

Beyond parameter updates, adoption of this proposal would necessarily require a hard fork, since it modifies the consensus protocol in two fundamental ways:

- The randomness for slot distribution is extended from 1 epoch to 2 epochs. At the start of epoch e, the snapshot of the stake distribution will be taken at the end of epoch e−2, rather than at the end of epoch e−1 as in Praos today.

- The general method of generating slot leader distributions is changed, making leader election more resilient to adversarial bias.

Consensus Performance

This proposal is not only about security, but also about consensus performance.

In Praos, because grinding allows adversaries to choose among multiple possible slot leader distributions, the probability of “bad events” (such as rollbacks or settlement failures) is statistically amplified compared to the honest model.

- If a bad event occurs with probability under unbiased randomness,

- An adversary able to try independent randomness candidates can increase the likelihood of that event up to (by the union bound).

This translates into slower settlement and weaker guarantees for the network as a whole. By substantially reducing compared to Praos, we limit the impact of grinding attacks and therefore improve settlement. In fact, the recommended configuration reduces settlement time by approximately 20–30% while maintaining equivalent security.

Relationship to Peras

Ouroboros Peras is a recent extension of Praos designed to accelerate settlement.

- Instead of waiting for the traditional 2160-block window (around 5 days) to guarantee finality, Peras introduces stake-weighted voting and certified blocks.

- Randomly chosen committees of stake pool operators can “vote” on blocks, and when enough votes are collected, the block receives a certificate.

- Certified blocks are treated as more important in the chain, which enables settlement in just 1–2 minutes.

Peras is fully compatible with Praos:

- When enough committee members participate, it achieves rapid settlement.

- When they do not (e.g., if too many operators are offline), Peras gracefully falls back to Praos. Peras would only fall back to Praos if there were massive network disruption or an attack by a 25% adversary.

In these fallback situations, the network still relies on Praos’ guarantees—precisely where Phalanx becomes relevant as a complementary defense layer. Phalanx ensures that even when Peras cannot certify blocks, the protocol still benefits from:

- Stronger protection against grinding attacks, and

- Faster settlement compared to unmodified Praos.

Together, they form a complementary pair:

- Peras provides speed when conditions are favorable.

- Phalanx ensures resilience and strong security guarantees in all cases.

Technical Depth

The remainder of this document provides the full technical specification for node implementors and researchers. Because Cardano’s security is grounded in cryptography, probability, and statistical guarantees, understanding the full details of this proposal requires technical knowledge in these areas. The complete specification is therefore dense: it describes mathematical models, cryptographic primitives, and rigorous proofs to ensure the system can be trusted at scale. Readers interested only in the high-level motivation and community impact may stop here.

Please refer to the CPD "Ouroboros Randomness Generation Sub-Protocol – The Coin-Flipping Problem" for a detailed understanding of randomness generation, leader election in Praos, and the coin-flipping dilemma in consensus protocols. Moving forward, we will dive into the core details, assuming you have the relevant background to understand the proposal.

Specification

The core principle of the proposed protocol change is to substantially escalate the computational cost of each grinding attempt for an adversary. To achieve this, every honest participant is required to perform a designated computation for each block they produce over an epoch (432,000 slots - 5 days) - note that this computation can be preprocessed locally at the beginning of the epoch. Consequently, an adversary attempting a grinding attack must recompute these operations for every single attempt, while being constrained by the grinding window, which dramatically increases the resource expenditure. By enforcing this computational burden, we drastically reduce the feasible number of grinding attempts an adversary with a fixed resource budget can execute, making randomness manipulation more expensive and significantly less practical.

1. High-Level Overview

1.1. Changes Relative to Praos

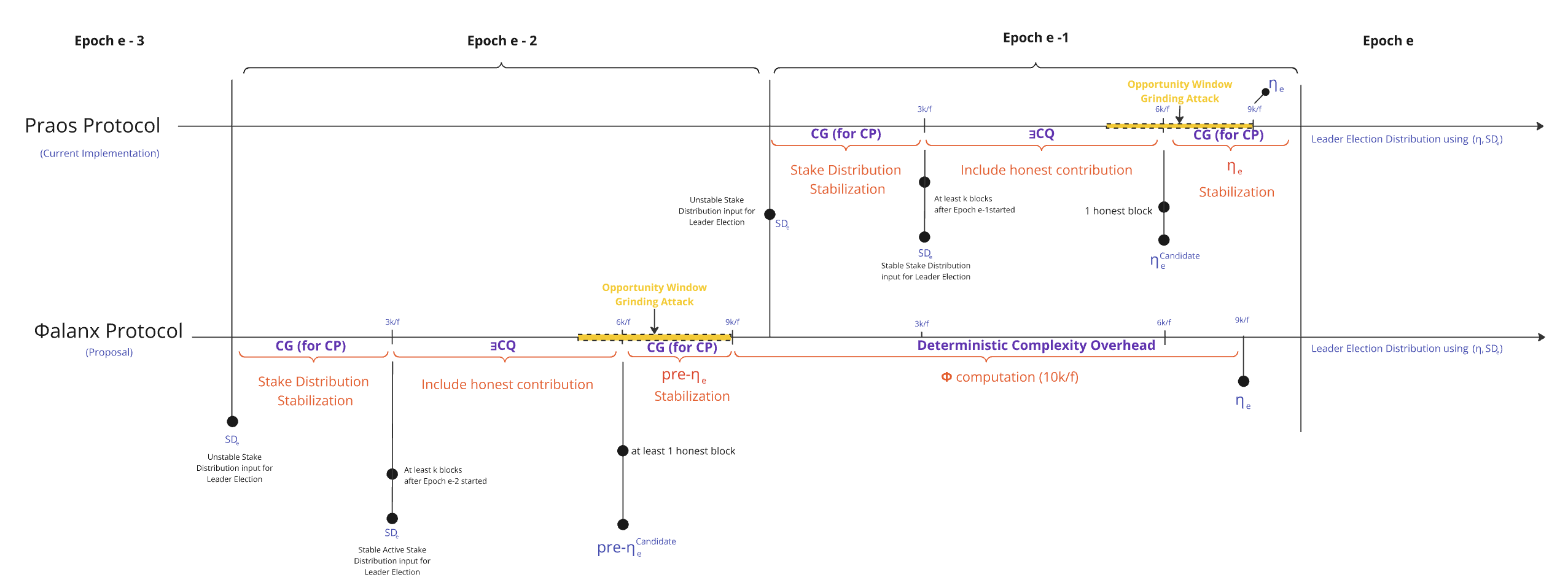

In Phalanx , the randomness generation and leader election flows are modified as follows:

- The stake distribution stabilization phase is shifted back by one epoch : The active stake distribution used for leader election is now derived from the end of instead of as in the original Praos protocol.

- The honest contribution inclusion phase, which originally resulted in a ηₑ candidate, is also shifted back by one epoch, aligning with the adjusted stake distribution stabilization. This value is now referred to as the pre-ηₑ candidate, signifying its role as an intermediate randomness nonce in the sub-protocol.

- The pre-ηₑ candidate, once stabilized (after ), undergoes a sequence of incremental operations using a new deterministic cryptographic primitive Φ (Phi). This sequence spans a full epoch size, specifically during the interval:.

- The final ηₑ (eta nonce), resulting from the Φ computation, completely determined by the prior stabilized pre-seed pre-ηₑ, does not need stabilization and is availablea a whole slots before the start of .

1.2. Inputs & Outputs

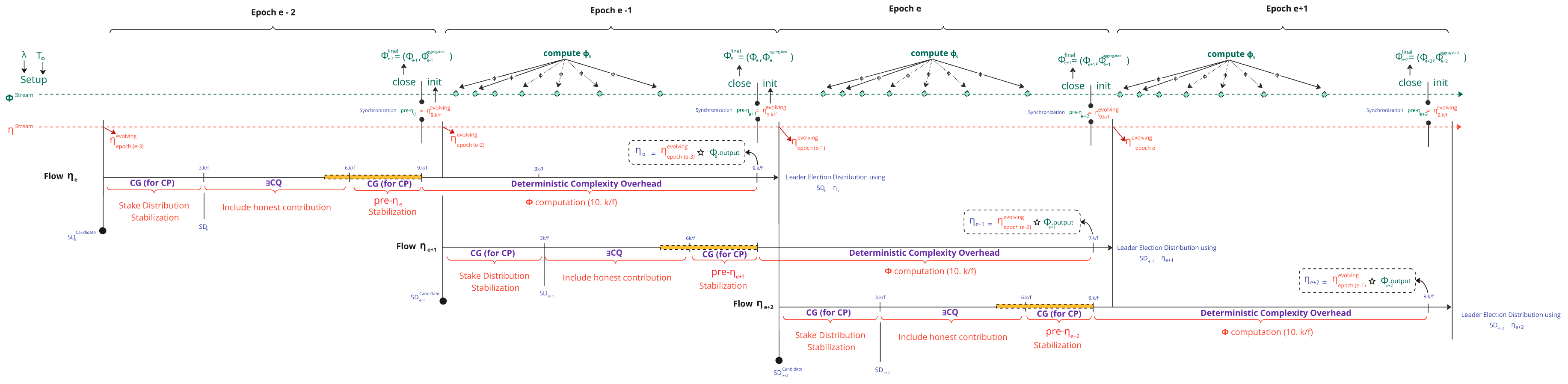

The Randomness Generation sub-protocol pipelines two parallel streams η stream and Φ Stream, which synchronize at at each epoch :

1.2.1. The η stream

- Already present in Praos and retained in Phalanx

- Updated with every block produced in the blockchain tree, a η stream captures intermediate values in the block headers, defined as follows:

| where | |

|---|---|

| The evolving nonce is initialized using the extraEntropy field defined in the protocol parameters. | |

| The VRF output generated by the Leader and included in the block header | |

| The concatenation of and , followed by a BLAKE2b-256 hash computation. |

1.2.2. The pre-ηₑ Synchronizations

- To generate for epoch , the stream Φ Stream is reset with the value of η stream at at

- This specific value of η stream is referred to as and defined as :

1.2.3. The Φ Stream

1.2.3.1. The Setup

The stream is bootstrapped by calling the parametrize function of the cryptographic primitive with:

where :

- is a security parameter for the cryptographic primitive .

- , a time-bound parameter representing the required computation duration, independent of available computing power.

- Any change to these 2 parameters would require a decision through Cardano governance.

- will contain derived configuration specific to the algorithm and the cryptographic primitive used.

1.2.3.2. The Lifecycle

It is reset at every pre-ηₑ synchronization point every slots :

At each slot , update the stream state by :

A node must be able to determine, based on the current state, whether it should begin computing iterations in order to provide a proof at its next scheduled leader slot (see Section "3.2.4.1. VDF integration" for details):

A node must be able to compute a specific chunk of the iterations independently of any global state. The result is an attested output—a pair where :

- is the computed output for iteration ,

- is a cryptographic proof attesting that was correctly derived from the input according to the rules of .

- Since this operation may be long-lived, intermediate attested outputs should be persistable to disk, allowing the node to stop, resume, or cancel computation from the latest completed sub-computation.

A subset of block-producing slots must include in their block bodies a unique attested output with denoting the iteration index within the computation :

- Each attested output updates the stream state as follows:

- Each attested output must be verifiable both:

- logically, to ensure it corresponds to the correct slot and index, and

- cryptographically, to confirm that the computation was effectively executed

At the synchronization point , the stream is closed providing with the last attested output along with an aggregated proof . This aggregated proof fastens node synchronisation by allowing nodes to efficiently verify the final result without checking each individual produced during the computation phase :

1.2.4. The η Generations

- This is the final nonce used to determine participant eligibility during epoch .

- It originates from the operation with at synchronization and η stream and the combination of the outputs using an aggregation function .

2. The Φ Cryptographic Primitive

2.1. Expected Properties

The Φ cryptographic primitive is a critical component of the Phalanx protocol, designed to increase the computational cost of grinding attacks while remaining efficient for honest participants. To achieve this, Φ must adhere to a set of well-defined properties that ensure its security, efficiency, and practical usability within the Cardano ecosystem. These properties are outlined in the table below :

| Property | Description |

|---|---|

| Functionality | Must be a well-defined mathematical function, ensuring a unique output for each given input (unlike proof-of-work, which allows multiple valid outputs). |

| Determinism | Must be fully deterministic, with the output entirely determined by the input, eliminating non-deterministic variations. |

| Efficient Verification | Must allow for fast and lightweight verification, enabling rapid validation of outputs with minimal computational overhead. |

| Compact Representation | Input and output sizes should be small enough to fit within a block, optimizing on-chain storage efficiency. Further reductions are desirable where feasible. |

| Lower Bound on Computation | Computational cost of evaluation should be well-characterized and predictable, with a lower bound that is difficult to surpass, ensuring adversaries cannot gain an unfair efficiency advantage. |

| Ease of Implementation & Maintenance | Should be simple to implement and maintain, ensuring long-term usability and minimizing technical debt. |

| Adaptive Security | Function and its parameters should be easily reconfigurable to accommodate evolving threats, such as advances in computational power or new cryptographic attacks. |

2.2. Verifiable Delayed Functions (VDF)

Verifiable Delayed Functions (VDFs) are cryptographic primitives designed to take a certain amount of time to compute, regardless of how much computing resources are available. This delay is enforced by requiring a specific number of sequential steps that cannot be easily sped up through parallel processing. Once the computation is done, the result, , comes with a proof, , that can be checked quickly and efficiently by anyone. Importantly, for a given input, the output is always the same (deterministic function), ensuring consistency. They usually rely on repeatedly squaring numbers in a mathematical setting that prevents shortcuts and enables quick verification.

As one can see, VDFs present functionality, determinism, efficient verification and lower bound on computation. The compact representation depends on the chosen group as well as the instantiation, which we will tackle later on. The implementation and maintenance is straightforward as the output of a VDF is a simple exponentiation of a group element, only the square operation is needed to be implemented to compute it. As for the proof, this depends on the precise VDF instantiation. Finally, the system is "adaptively secure" as we can set up a group with high security to be reused for a whole epoch or more, and set the number of squaring, also called difficulty, depending on how much computation we want the nodes to perform.

Verifiable Delayed Functions were introduced by Boneh et al. [6] where the authors suggest several sequential functions combined with the use of proof systems in the incrementally verifiable computation framework (IVC) for viable proof generation and fast verification. VDF variants revolve around two primary SNARK-free designs: one from Pietrzak [36] and the second from Wesolowski [35]. They differ in the proof design.

In Wesolowski’s paper, the proof is defined as where is the challenge, the difficulty and is a prime number found by hashing the VDF input and output together.

The proof is thus a single group element that can be computed in at most group operations and constant space, or time where the number is both the number of processors and space while the verification takes scalar multiplications in and two small exponentiations in the group . The proving time can further be optimized to group multiplications by reusing the evaluation intermediary results.

Wesolowski also presents aggregation and watermarking methods. The aggregation method does not consist in aggregating multiple proofs but computing a proof of several VDF challenges. This is done by batching all inputs and outputs together and creating a proof for this batched input. The watermarking is done by computing the VDF twice, once normally and another time on a combination of the challenger’s id and VDF input.

In Pietrzak’s paper, the proof is a tuple of group elements , of size logarithmic in , that can be computed in time and can be optimized to multiplications. The verification takes small exponentiations. Subsequent work on Pietrzak’s paper shows how VDFs challenges can be structured in a Merkle tree to get a proof of the whole tree.

We will choose Wesolowski design over Pietrzark because of its space efficiency and possibility to aggregate proofs.

Specialized hardware such as ASICs can be used to evaluate VDF output much faster, up to a factor 5 in Chia's VDF project while Ethereum considers a factor 10. This, while unfortunate, is not prohibitive in our context as we only consider the use of VDFs for their computational cost. An attacker would still require a substantial budget to perform an anti-grinding attack in addition to purchasing at scale the specialized hardware that is not inexpensive nor readily available (Chia' ASICs can be purchased on a case per case basis for $1,000). We can also note that any solution would still be affected by hardware, like in the case of proof of works and hash farms.

2.3. Wesolowski's VDF

2.3.1. VDF Primitives

To define Wesolowski VDF construction, we first introduce a series of hash functions: , which samples random integers of bits, , which samples a random integer from the set of the first prime numbers, and , which samples a random group element of the class group .

We define the interface of a Verifiable Delay Function as , and define its underlying functions based on class groups as follows:

- Takes as input a security parameter and a challenge discriminant . This challenge discriminant acts as a source of public entropy used to deterministically derive the group discriminant , which defines a group of unknown order along with its group operation . The use of a challenge ensures that the resulting group is unbiasable and unpredictable, preventing adversarial precomputation. We shall drop the group settings from further functions for readability. Internally, we expect the setup procedure to invoke the following sub-operations:

-

Given a challenge and a number of iterations , computes the output .

-

Given a challenge and output , computes the VDF proof as where is sampled from the first prime numbers.

-

Returns 1 if successfully attests that with overwhelming probability, that is if where and . Returns 0 otherwise.

2.3.2. VDF Aggregation Primitives

In this section, we present a mechanism for producing a Wesolowski VDF aggregation proof. This construction enables efficient synchronization for network participants and may play a central role in deriving the final epoch nonce . The aggregation mechanism has the following interface whose functions will be detailled afterwards. We assume that a class group has already been set up, by .

N.B. We are showing here the core algorithms for simplicity and readability. In practice, we may use further techniques, for instance using an arbitrary byte and the epoch's number as personalization tags to ensure domain separation.

At the beginning of each epoch, we initialize the VDF accumulators' state that will be used to generate the VDF aggregation proof using .

Initialize accumulators | |

|---|---|

| Input Parameters |

|

| Steps |

|

| Returned Output | — Input and output accumulators and initial aggregation nonce. |

Every time a VDF output is published on-chain, if no previous VDF outputs are missing, we shall update the accumulators' state using .

Update accumulators | |

|---|---|

| Input Parameters |

|

| Steps |

|

| Returned Output | — Updated accumulator's state. |

Once all VDF outputs have been generated and the accumulators updated, we can generate the VDF aggregation proof using .

Prove accumulators | |

|---|---|

| Input Parameters |

|

| Steps |

|

| Returned Output | — Aggregated proof. |

The VDF aggregation proof can then be efficiently be verified using .

Verify accumulators | |

|---|---|

| Input Parameters |

|

| Steps |

|

| Returned Output | — Verification bit. |

3. Φ Stream Specification

We previously outlined the purpose of the Phalanx sub-protocol and introduced the cryptographic primitive underpinning its security guarantees. In this section, we provide a precise technical specification of the protocol, focusing on how the iterations are distributed and how Wesolowski’s Verifiable Delay Function (VDF) is integrated into the process.

3.1. Distribution of Φ Iterations

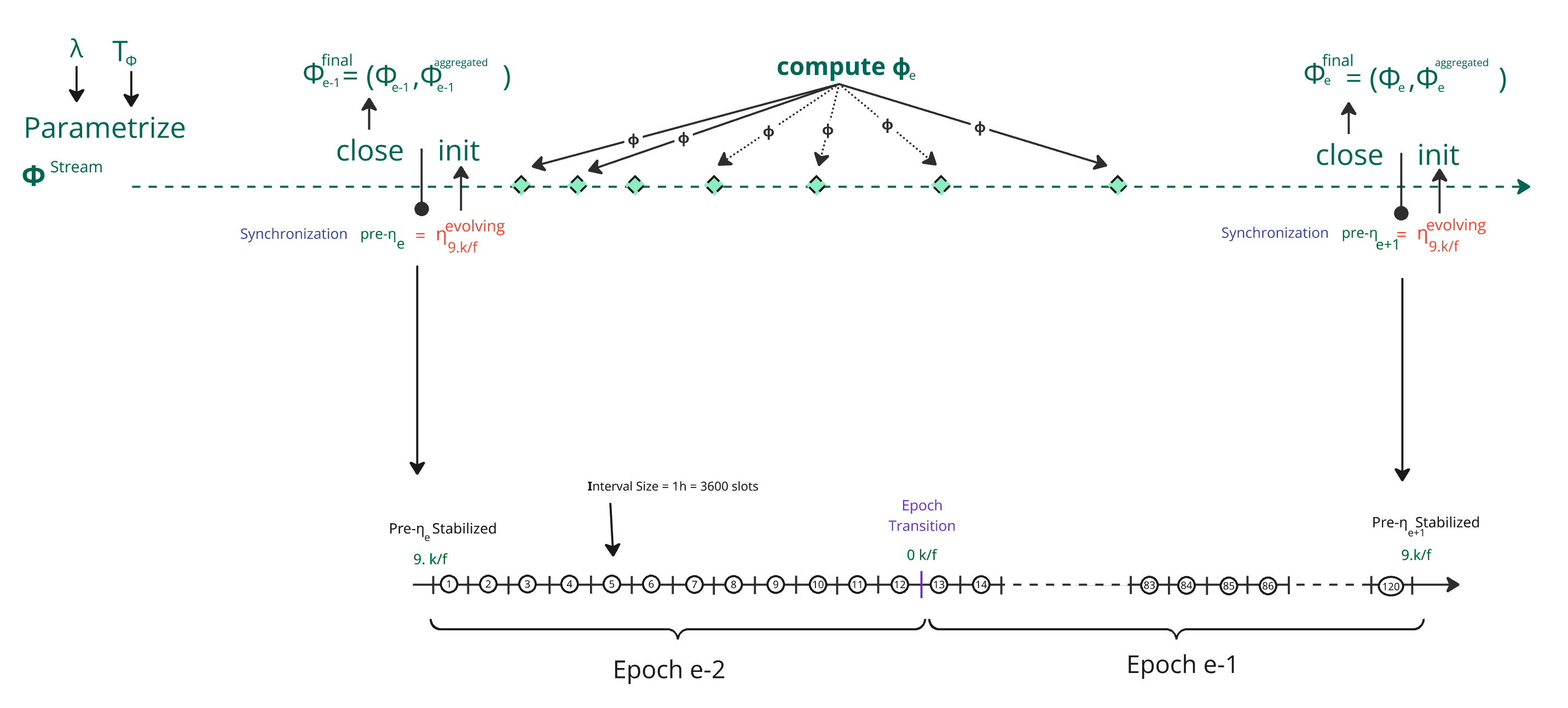

As previously mentioned, Φ Stream is divided into epoch-sized lifecycle segments. Each segment begins with an initialize function, ends with a close function, and is immediately followed by the start of a new segment.

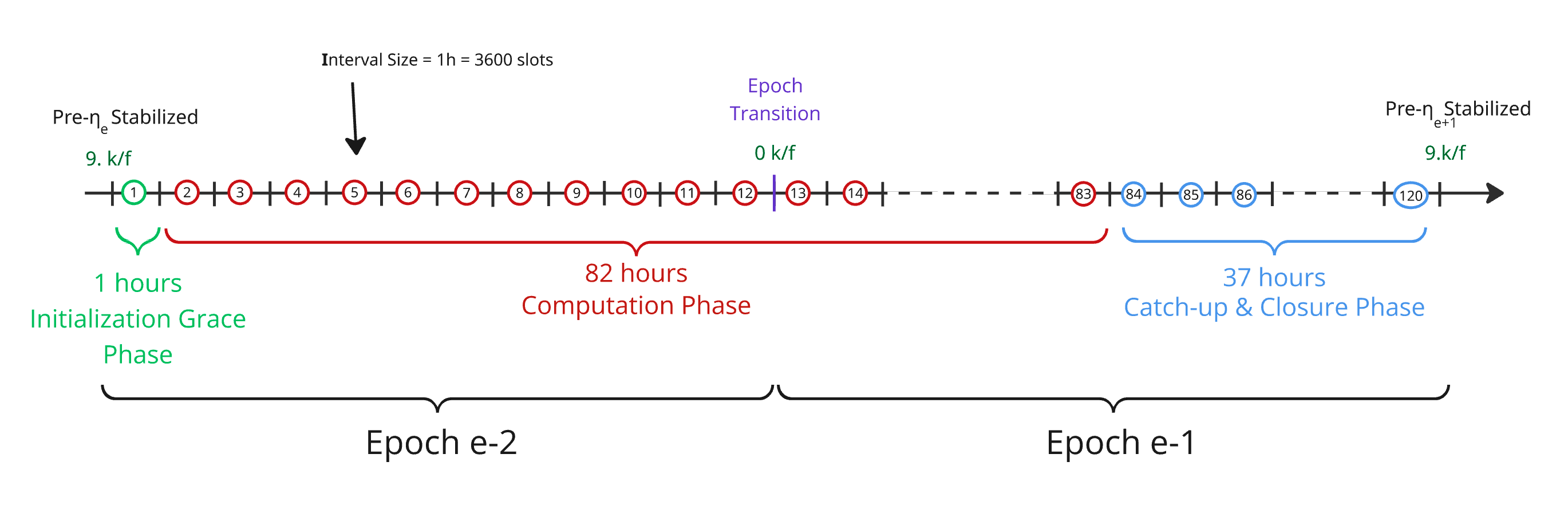

We further partition this segment into intervals, each large enough to guarantee (with 128-bit confidence) that at least one block will be produced within it. This corresponds to 3435 slots per interval. For simplicity, we round this to 3600 slots (~1 hour), resulting in exactly 120 intervals per segment, which conveniently aligns with the 120 hours in five days.

🔍 How 128-bit Confidence gives 3435 Slots ?

📦 Guaranteeing Honest Block Inclusion with 128-bit Confidence in our context

We want to make sure that, in any given interval of slots, there's at least one honest block produced — with a failure probability of at most (which is a standard for cryptographic security).

It is also important to note that we are operating in a context where fork-related concerns can be safely abstracted away. In particular, if an adversary were to attempt a private chain attack and succeed, it would imply that their chain is denser and that the proof of computation is valid. In this setting, forks do not undermine security—they actually improve the probability of having at least one valid computation published within the interval.

This means:

🎲 Step 1 — What’s the chance of not getting an honest block?

Each slot gives honest participants a chance to be selected as leader.

Let:

- → probability a slot is active

- → at least 51% of stake is honest

Then the chance that no honest party is selected in a slot is:

So, the chance that at least one honest party is selected in a slot is:

This means that each slot has a 2.584% chance of having an honest leader.

📐 Step 2 — What about across slots?

The chance that no honest block is produced in consecutive slots is:

We want this to be less than or equal to , so:

✏️ Step 3 — Solve for

Take log base 2 of both sides:

Now plug in:

To guarantee with 128-bit confidence that an interval contains at least one honest block, the interval must be at least 3435 slots long.

This ensures security even if up to 49% of stake is adversarial.

This structure can be illustrated as follows:

As previously described, we configure the stream using two key parameters, most notably the total computational budget . This value defines the total amount of work we require SPOs to collectively perform.

We split into discrete iterations, each with the following properties:

- Iterations are fully independent and can be computed in parallel.

- Slot leaders are responsible for submitting a proof of computation for the specific iteration assigned to them.

- These computations are fully decoupled, there is no requirement to wait for previous iterations, enabling input precomputation and reducing latency.

- All iterations must eventually be completed, and an additional and final iteration is used to aggregating all outputs along with a corresponding proof.

- The iterations are then used to compute the epoch randomness .

Each iteration is mapped to a specific interval, with the following constraints:

- The first interval is intentionally left without an assigned iteration, giving slot leaders time to compute the first output for interval #2 and allowing precomputation of the challenges.

- Each interval must be longer than the time required to compute a single iteration (i.e., the iteration duration must be less than one hour).

- The first slot leader to produce a block within an interval is responsible for submitting the corresponding attested output.

At first glance, we could divide evenly across the 120 intervals. However, to ensure resilience, the protocol must remain robust even in extreme scenarios—such as a global outage causing 36 hours of consecutive downtime (30% of an epoch). This scenario is detailed in the Cardano Disaster Recovery Plan.

A global outage implies a sequence of blockless intervals. To tolerate such conditions, the protocol must be able to handle up to 36 intervals without block production. To address this, we introduce a catch-up mechanism:

- We reserve the final 36 intervals of the segment specifically for recovering any missing attested outputs.

- These missing outputs must be submitted in order, according to their original indices, ensuring deterministic reconstruction of the full computation stream.

We define 4 sequential phases in the stream lifecycle:

-

🟧 Parametrization Phase : The stream is configured but not yet active. Parameters such as (computation hardness) and (number of iterations) are established during this phase.

-

🟩 Initialization Grace Phase: The stream is activated, and Stake Pool Operators (SPOs) are given a grace period to begin the first iteration of the computation.

-

🟥 Computation Phase: During this phase, the protocol expects attested outputs to be published on-chain. It consists of 82 computation iterations, each producing an intermediate output that contributes to the final result.

-

🟦 Catch-up & Closure Phase:

- A bounded recovery window that allows SPOs to submit any missing attested outputs, ensuring the completeness of the computation prior to finalization.

- A final dedicated interval to compute the aggregation of all previous outputs and derive the epoch’s final randomness . This phase seals the stream and concludes a lifecycle.

The diagram below illustrates how the lifecycle segment is structured:

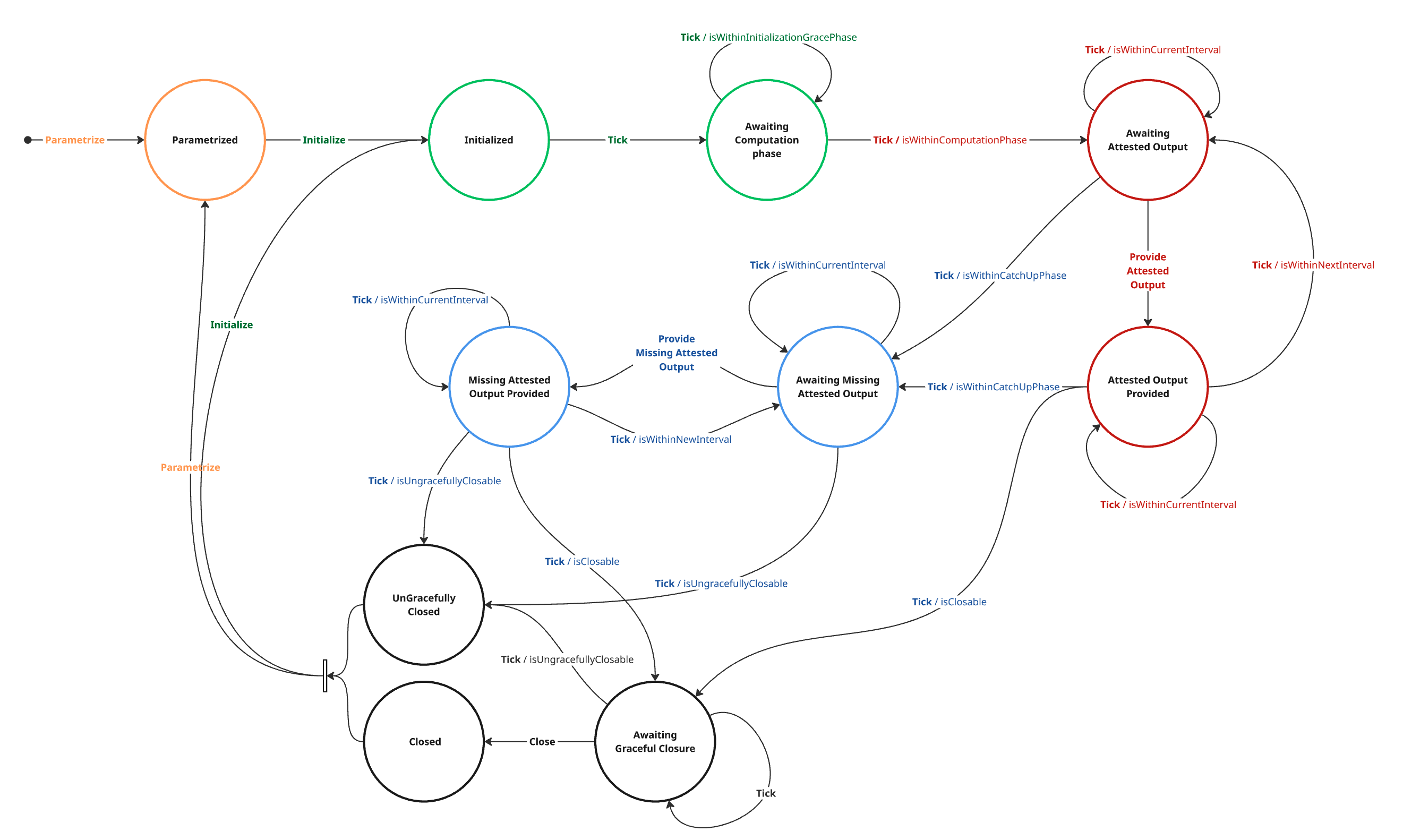

3.2. The State Machine

3.2.1. Diagram Overview

The figure below presents the state transition diagram for the Phalanx computation stream. Each node represents a distinct state in the lifecycle of the stream, and the arrows indicate transitions triggered by Tick events. These transitions are guarded by boolean predicates evaluated at each slot (e.g., isWithinComputationPhase, isWithinCurrentInterval).

In the following sections, we provide a detailed breakdown of each phase of the state machine, specifying its purpose, entry conditions, timing constraints, and transitions.

3.2.2. 🟧 Parametrization Phase

At the setup of , the total number of VDF iterations is derived from the time-bound parameter , using a reference hardware profile that reflects the minimal computational capacity expected of SPOs. While this derivation may not be fully automatable in practice, we include it here to clarify how time constraints are mapped to iteration counts during configuration.

Importantly, this parametrization phase occurs only once, either during the initial bootstrap of the stream or following a transition from the Closed to Initialized state.

Parametrized | |

|---|---|

| Fields |

|

parametrize | |

|---|---|

| Input Parameters |

|

| Derivation Logic | |

| Returned State |

3.2.3. 🟩 Initialization Grace Phase

Initialization occurs at every pre-ηₑ synchronization point, followed by an Initialization Grace period during which the protocol waits long enough for the first iteration to be computed and its proof to be included within the first computation interval. This process recurs every slots.

3.2.3.1. Initialize Command

We show here how to initialize the class-group based VDF algorithm when generating a group for each different epoch. Were we to use the same group for many, if not all, epochs, we would run these steps in the Parametrization phase and change the discriminant seed accordingly, e.g. if we use the same group forever we could use .

Initialized | |

|---|---|

| Fields |

|

initialize | |

|---|---|

| Input Parameters |

|

| Derivation Logic | |

| Returned State |

3.2.3.2. Tick Commands & Grace Period

AwaitingComputationPhase | |

|---|---|

| Fields |

|

Initial tick transition to AwaitingComputationPhase:

tick | |

|---|---|

| Input Parameters |

|

| Returned State |

Subsequent ticks on AwaitingComputationPhase:

tick | |

|---|---|

| Input Parameters |

|

| Returned State |

3.2.4. 🟥 Computation Phase

3.2.4.1. VDF integration

We are now entering the Computation Phase. We have waited long enough for the first slot leader within the initial interval to have the opportunity to produce a block and submit the corresponding attested output. However, because the slot distribution is privately visible, leaders within the interval cannot determine whether they are the first to produce a block.

Each leader is free to adopt their own strategy for deciding whether to initiate the proof of computation. A simple and conservative approach is to wait until , where is a small constant. At that point, the leader may begin computing. If a block has already been produced by then, the leader can either skip the computation or abort it if already in progress. This delay increases the chances that any earlier eligible leaders have already submitted their outputs, thereby minimizing the risk of redundant proofs.

To publish the first block of interval of epoch , the node invokes:

This function internally calls the VDF primitives: and with inputs constructed as:

- The parameters and are retrieved from the

Initializedstate.

Finally, the node includes the attested outputs in the block header:

- : the VDF output for interval

- : the corresponding VDF proof for interval

In rare cases, an interval may produce no block, and consequently, no expected proof for the corresponding iteration. The computation phase simply acknowledges these gaps; they are handled during the subsequent Catch-up Phase, which is specifically designed to resolve such missing outputs.

3.2.4.2. The States

During the computation phase, the stream alternates between two closely related states: AwaitingAttestedOutput and AttestedOutputProvided. These two states are structurally identical, meaning they share the same underlying fields. What distinguishes them is their semantic role in the protocol’s lifecycle:

AwaitingAttestedOutputrepresents the period before an attestation has been submitted for the current interval.AttestedOutputProvidedsignals that the attestation for the current interval has been successfully received and verified.

The field structure for both is as follows:

| Field | Description |

|---|---|

| Reference to the prior initialization state. | |

| The current slot in the timeline. | |

|

The AttestedOutputProvided state reuses the exact same structure:

This version aligns both the field names and their types in two neat columns. Let me know if you'd prefer the braces to be placed differently (e.g. outside the alignment block) for aesthetic reasons.

| Field | Description |

|---|---|

| Reference to the prior initialization state. | |

| The current slot in the timeline. | |

|

3.2.4.3. ProvideAttestedOutput & Tick Commands

The provideAttestedOutput command is used to submit a new attested output for a specific interval , when the protocol is in the AwaitingAttestedOutput state. This function verifies the validity of the provided proof and updates the stream state accordingly :

provideAttestedOutput | |

|---|---|

| Input Parameters |

|

| Property Check |

|

| Returned State | — Updated state reflecting the verified attestation. |

Once an attested output has been provided, the next slot may trigger a tick event. If no further action is taken, the system must determine whether it remains within the current interval, moves to the next, or enters the catch-up phase. The following command captures this logic when starting from the AttestedOutputProvided state :

tick | |

|---|---|

| Input Parameters |

|

| Returned State |

Alternatively, when still waiting for an attestation and no block was produced, a tick may trigger a transition based on the current time. This command applies to the AwaitingAttestedOutput state before any attestation has been submitted :

tick | |

|---|---|

| Input Parameters |

|

| Returned State |

isClosable indicates that all attested outputs have been successfully provided, and only the final interval remains, during which the outputs are aggregated and the seed is derived and recorded on-chain.

3.2.5. 🟦 Catch-up Phase

This Catch-up Phase closely resembles the preceding Computation Phase, but its purpose is to recover from any blockless intervals that may have occurred — albeit such cases are extremely rare.

The phase spans a total of 37 intervals: 36 are reserved to account for up to 36 consecutive intervals without block production (e.g., a global outage affecting 30% of an epoch), and 1 final interval is allocated for the Closure Phase. As in the Computation Phase, missing attested outputs must be submitted in order, one per interval.

The faster these missing outputs are provided, the sooner the state machine can transition to the final phase. Although the protocol allocates 37 intervals to handle the worst-case scenario, recovery may complete much earlier in practice.

This section focuses solely on the Catch-up Phase; the next section will describe the process of stream closure.

3.2.5.1. The States

Structurally, we define two states that are similar in form and semantics to AwaitingMissingAttestedOutput and AttestedMissingOutputProvided:

This phase focuses on recovering the missing attested outputs—specifically, the None entries in the attestedOutputs array. The goal during this phase is to have those missing values provided.This phase operates under strict sequential expectations where the missing attested outputs must be provided in order, one per interval, as in the Computation Phase. To make this explicit, we define the sequence of expected indices as follows:

This ordered set defines the exact sequence in which the missing attestations must be submitted during the Catch-up Phase.

3.2.5.2. ProvideMissingAttestedOutput & Tick Commands

The provideMissingAttestedOutput command is used to submit a missing attested output for a specific interval , when the protocol is in the AwaitingMissingAttestedOutput state. This function checks the validity of the proof and updates the stream state accordingly:

provideMissingAttestedOutput | |

|---|---|

| Input Parameters |

|

| Property Check |

|

| Returned State | — Updated state reflecting the accepted missing output. |

Once a missing attested output has been provided, the next slot may trigger a tick event. The system must determine whether it remains within the current interval, moves to the next, or enters the closure phase. The following command captures this logic when starting from the MissingAttestedOutputProvided state :

tick | |

|---|---|

| Input Parameters |

|

| Returned State |

Alternatively, when still waiting for an attestation and no block was produced, a tick may trigger a transition based on the current time. This command applies to the AwaitingMissingAttestedOutput state before any attestation has been submitted :

tick | |

|---|---|

| Input Parameters |

|

| Returned State |

isUngracefullyClosable indicates that the end of the lifecycle segment has been reached (i.e., currentSlot++ == 0), while some attested outputs are still missing. When this condition holds, the lifecycle is forcefully closed in an ungraceful manner.

3.2.6 ⬛ Closure Phase

We now enter the final phase of the lifecycle, during which all collected outputs are expected to be aggregated and recorded on-chain, and the seed derived and committed.

Successful Scenarios: In these cases, all attested outputs have been provided by the end of the catch-up phase.

- Best-case scenario: The closure phase begins at interval 84, giving the system 37 intervals to perform output aggregation and seed commitment under normal operating conditions.

- Worst-case scenario: The catch-up mechanism is fully utilized, and the system enters the closure phase at interval 120, the very last interval of the lifecycle segment. Even so, all necessary outputs have been successfully provided.

Failure Scenario:

This occurs when the lifecycle segment reaches its end (i.e., the full slots), and despite the entire duration of the catch-up mechanism (up to interval 120), either some required attested outputs remain missing, or all outputs have been delivered but the final aggregation has not occurred.

This scenario represents an extremely rare event—statistically far beyond 128-bit confidence—and reflects a severe disruption in which no blocks have been produced for over 36 hours. These edge cases are represented in the diagram by the transition Tick / isUngracefullyClosable.

3.2.6.1. The States

In this phase, we define two states:

AwaitingGracefulClosure: This state signifies that all 82 attested outputs have been successfully collected. At this point, the outputs are no longer optional—each index is populated with a verified pair .

Closed: This is a final state in the stream lifecycle. It signifies that the aggregated output has been computed and verified, and the final epoch randomness $\eta_e$ has been successfully derived—achieving the core objective of the protocol. This state is reached in response to either aClosecommand :

UngracefullyClosed: This is a terminal state in the stream lifecycle. It indicates that either not all expected attested outputs were provided, or the aggregated output could not be computed. As a result, is returned as the final value of . Statistically, this state is highly unlikely to occur, but it is explicitly handled for completeness and structural consistency of the state machine. The transition to this state is triggered byTickin combination with theisUngracefullyClosablecondition.

3.2.6.2. The Successful Scenario: The Close Command

At this stage, the system is in the AwaitingGracefulClosure state. All necessary data has been collected, and a block can now be produced within the remaining time before the end of the stream lifecycle (as previously discussed, this could occur at the 84th or 120th interval, depending on how smoothly the lifecycle progressed).

In this scenario, the first block producer within the remaining intervals must include the following values in the block body:

- : The aggregated output of the computation, representing the final result and its corresponding proof.

- : The final objective of the protocol—a 256-bit epoch randomness beacon, which will be used to seed leader election in the next epoch.

These values complete the stream and trigger the transition to the Closed state.

Close | |

|---|---|

| Input Parameters |

|

| Property Check |

|

| Epoch Randomness | — Apply the SHA-256 hash function to . |

| Returned State | — Final state embedding the verified computation and the derived epoch randomness. |

3.2.6.3. tick Command

The tick command handles time progression within the AwaitingGracefulClosure state:

-

isUngracefullyClosableis true when the stream reaches the end of its lifecycle segment (i.e., ) and some attested outputs are still missing. When this condition holds, the system transitions to theUngracefullyClosedstate. -

Otherwise, the command simply increments the

currentSlotfield to reflect time advancement.

tick | |

|---|---|

| Input Parameters |

|

| Returned State |

isUngracefullyClosable indicates that the end of the lifecycle segment has been reached (i.e., currentSlot++ == 0), while some attested outputs are still missing. When this condition holds, the lifecycle is forcefully closed in an ungraceful manner. Otherwise we are just update the current slot field

3.2.6.4. The Failure Scenario: Ungraceful Closure

If the protocol reaches the end of its lifecycle, or it becomes evident that too many consecutive blockless intervals have occurred—such that a valid can no longer be derived—the Tick signal triggers the isUngracefullyClosable condition. This causes a transition to the terminal state UngracefullyClosed, in which the precomputed value is adopted as the official .

While this state is statistically unexpected, it is explicitly defined to ensure completeness and structural consistency within the state machine.

4. Recommended Parameter values

There are two categories of parameters in the Phalanx protocol that Cardano governance will need to oversee. The first category concerns the VDF parameters, which are essential to maintaining the protocol’s cryptographic security. The second concerns the total time budget that will be required from SPOs during the Computation Phase.

The goal of this section is to provide recommended values for these parameters along with the rationale behind their selection, so that governance is well-equipped to adjust them over time in response to advances in adversarial computational power.

4.1 VDF Security Parameters λ and ρ

The VDF component in Phalanx relies on class group security, which is parameterized by two values:

- , the class group security parameter, and

- , the grinding resistance parameter, newly introduced in the Phalanx design.

Several combinations of and are considered in the literature, depending on the desired level of paranoia or efficiency. Based on the recommendations from the paper Trustless Unknown-Order Groups, we highlight the following trade-offs:

-

A paranoid setting, , requires a group size of ~3400 bits.

-

A more realistic yet cautious setting, , brings the group size down to ~1900 bits, but requires discriminants of at least 3800 bits.

-

Based on those benchmarks and our needs, we opt for , which corresponds to:

- a discriminant size of 4096 bits,

- and a group size of 2048 bits.

This strikes a balance between long-term security and practical efficiency:

- On one hand, breaking the class group is considered harder than finding a collision in a 256-bit hash (our minimum security baseline).

- On the other hand, by following the paper’s recommendation and selecting a slightly lower , we can reduce the size of on-chain group elements while maintaining sufficient resistance against grinding.

Since Phalanx is designed to operate with a single class group instance “for the lifetime of the protocol” (reparametrization would require explicit governance intervention), this configuration ensures protocol simplicity, consistency, and operational predictability.

4.2 Time Budget Tᵩ and Derived T

In terms of efficiency, the section 1. How Phalanx Addresses CPS-21 – Ouroboros Randomness Manipulation in the Rationale part of this document illustrates, through three scenarios , , and , how different time budgets (2 hours, 12 hours, and 5 days, respectively) improve the protocol’s security guarantees against grinding attacks.

In terms of computational load, we recommend setting the time budget at a midpoint between minimal and maximal protocol capacity, corresponding to approximately 12 hours of execution on typical, CPU-based hardware as recommended for SPOs. However, this choice should ultimately be guided by settlement time performance requirements across the ecosystem, including the needs of partner chains and other dependent components.

4.2.1 Specialized ASIC vs CPU-Based Chips

We need to account for the possibility that adversaries may equip themselves with specialized ASIC chips optimized for computing Wesolowski’s Verifiable Delay Function (VDF). The Chia team has already developed and deployed such ASIC Timelords, which demonstrate between 3× and 5× performance improvements compared to standard CPU-based implementations. These ASICs reach up to 1,000,000 iterations per second, while commodity CPU Timelords typically max out around 260,000 IPS (Chia Network, Oct 2023).

To mitigate this performance asymmetry, our initial strategy is to require a 12-hour equivalent workload on standard CPU hardware (as in ), which is calibrated to provide the same security guarantees as a less aggressive configuration like . This gives us a conservative baseline for security, assuming an adversary might leverage ASIC acceleration.

Critically, scaling this kind of grinding capability is expensive. For an adversary to mount an effective grinding attack, they would need to build and operate a farm of VDF-optimized ASICs — a non-trivial financial and operational challenge. Chia’s rollout of these units has been tightly controlled and aimed at ensuring global distribution, not centralization (Chia Global Expansion Update, June 2024).

Mid-term, we propose encouraging — or potentially requiring — stake pool operators (SPOs) to adopt VDF ASIC hardware. This would ensure that honest participants remain competitive and are not systematically outperformed by well-resourced adversaries.

Long-term, our strategy involves standardizing the use of verifiable delay ASICs across the network, or alternatively, establishing a dynamic calibration process. This would allow iteration requirements to be periodically adjusted based on the evolving performance gap between commodity and specialized hardware, maintaining consistent and predictable security guarantees.

In summary, while ASIC-equipped adversaries could, in theory, gain a computational advantage during the grinding window, the cost and scale required to pose a real threat remains high. Our mitigation strategy is to raise the honest baseline to neutralize this advantage and prepare for possible hardware evolution over time.

4.2.1 Deriving from Tᵩ to T

We recommend a 12-hour computation budget on standard CPU-based machines, which we estimate to be 10× slower than specialized ASICs available to adversaries. This configuration corresponds to Phalanx1/10 in terms of time budget, while achieving Phalanx1/100 in terms of security guarantees against grinding attacks.

However, this time budget () is a high-level abstraction. To implement it concretely, we must derive the number of VDF iterations () required for each first block of an interval. Assuming 82 intervals per epoch, this translates to:

So, we ask for approximately 10 minutes of VDF computation per published interval block on standard CPU-based hardware.

To translate this into a concrete number of VDF iterations (), we rely on performance benchmarks from the implementation available in the repository rrtoledo/chiavdf. This library is a highly optimized and production-hardened implementation of Wesolowski's VDF, currently in use by the Chia blockchain. We have made minor, superficial modifications to this codebase solely to facilitate benchmarking and increase the discriminant size.

Thanks to its well-established performance profile, this implementation provides a dependable baseline for estimating how many iterations can be executed within a fixed time frame on standard CPU hardware. Under our test environment—an Ubuntu machine equipped with an Intel® Core™ i9-14900HX (32 cores, 64 GiB RAM)—we observed approximately 27 million iterations in a 10-minute window.

Note : We recommend that this benchmark be re-run on a dedicated, representative SPO machine to calibrate a more accurate production value for .

5. Efficiency analysis

5.1 Block Publication

To publish a block, a node must:

- Perform squarings to compute the output,

- Execute operations for the proof generation.

We now show benchmarks for evaluating and proving together VDFs, as well as individually, for different discriminant sizes done on a Ubuntu computer with Intel® Core™ i9-14900HX with 32 cores and 64.0 GiB RAM. For a 4,096 bit long discriminant, we perform around 45,000 iterations per second, and so evaluate and prove a VDF in 22.6s.

| Size Discriminant | #iterations | IPS | Evaluate and Prove (s) | σ proving | Eval (s) | σ proving | Prove (s) | σ proving |

|---|---|---|---|---|---|---|---|---|

| 256 | 10,000 | 637252 | 0.0187 | 0.000676 | 1.57E-02 (84%) | 0.000563 | 8.00E-03 (43%) | 0.000461 |

| 256 | 100,000 | 641349 | 0.172 | 0.00115 | 1.56E-01 (91%) | 0.00188 | 5.68E-02 (33%) | 0.00165 |

| 256 | 1,000,000 | 627336 | 1.72 | 0.0215 | 1.59E+00 (93%) | 0.0197 | 4.88E-01 (29%) | 0.00633 |

| 512 | 10,000 | 367449 | 0.0322 | 0.000635 | 2.72E-02 (85%) | 0.000648 | 1.31E-02 (41%) | 0.000204 |

| 512 | 100,000 | 378942 | 0.289 | 0.0029 | 2.64E-01 (92%) | 0.00283 | 8.76E-02 (31%) | 0.000893 |

| 512 | 1,000,000 | 378204 | 2.83 | 0.0287 | 2.64E+00 (94%) | 0.0279 | 7.29E-01 (26%) | 0.00873 |

| 1024 | 10,000 | 206186 | 0.0537 | 0.000902 | 4.85E-02 (91%) | 0.00076 | 2.00E-02 (38%) | 0.000364 |

| 1024 | 100,000 | 211921 | 0.503 | 0.00722 | 4.72E-01 (94%) | 0.00721 | 1.45E-01 (29%) | 0.00198 |

| 1024 | 1,000,000 | 213319 | 4.92 | 0.0506 | 4.69E+00 (96%) | 0.0475 | 1.20E+00 (25%) | 0.0136 |

| 2048 | 10,000 | 103135 | 0.105 | 0.000285 | 9.70E-02 (92%) | 0.000303 | 3.77E-02 (36%) | 0.000122 |

| 2048 | 100,000 | 105315 | 1.01 | 0.0165 | 9.50E-01 (94%) | 0.0123 | 2.78E-01 (28%) | 0.00516 |

| 2048 | 1,000,000 | 107038 | 9.75 | 0.0746 | 9.34E+00 (96%) | 0.0828 | 2.20E+00 (23%) | 0.0209 |

| 4096 | 10,000 | 44567.8 | 0.244 | 0.00463 | 2.24E-01 (92%) | 0.00454 | 8.30E-02 (35%) | 0.00168 |

| 4096 | 100,000 | 45848.6 | 2.31 | 0.0253 | 2.18E+00 (95%) | 0.0229 | 6.00E-01 (26%) | 0.0089 |

| 4096 | 1,000,000 | 46293.2 | 22.6 | 0.16 | 2.16E+01 (96%) | 0.148 | 4.79E+00 (22%) | 0.0422 |

5.2 Block Verification

5.2.1 When Not Syncing

To verify a VDF proof, a node performs:

- 2 hashes,

- 4 small exponentiations,

- 3 group multiplications.

Over an epoch with intervals, this results in:

- hashes,

- small exponentiations,

- group multiplications.

We now show verification benchmarks for discriminants of different sizes done on the same machine as before. For a 4,096 bit long discriminant, the verification takes around 15ms.

| Size Discriminant | #iterations | Verification (ms) | σ verification |

|---|---|---|---|

| 256 | 10,000 | 1.74E+00 (10%) | 0.0786 |

| 256 | 100,000 | 1.12E+00 (1%) | 0.0471 |

| 256 | 1,000,000 | 1.75E+00 (1%) | 0.151 |

| 512 | 10,000 | 3.25E+00 (11%) | 0.0562 |

| 512 | 100,000 | 1.89E+00 (1%) | 0.0268 |

| 512 | 1,000,000 | 2.11E+00 (1%) | 0.134 |

| 1024 | 10,000 | 3.66E+00 (7%) | 0.117 |

| 1024 | 100,000 | 3.21E+00 (1%) | 0.0467 |

| 1024 | 1,000,000 | 3.20E+00 (1%) | 0.135 |

| 2048 | 10,000 | 7.00E+00 (7%) | 0.0409 |

| 2048 | 100,000 | 9.33E+00 (1%) | 0.147 |

| 2048 | 1,000,000 | 6.40E+00 (1%) | 0.218 |

| 4096 | 10,000 | 1.58E+01 (7%) | 0.316 |

| 4096 | 100,000 | 1.47E+01 (1%) | 0.248 |

| 4096 | 1,000,000 | 1.37E+01 (1%) | 0.303 |

5.2.2 When Syncing

When synching, the nodes only need to update the accumulators and verify the final aggregation proof. As such, the node perform in total arounf half as less operations than verifying all proofs individually. More particularly, we have:

- hashes,

- small exponentiations.

- group multiplications,

Note: The exponentiations involving the values are half as expensive as those in the full proof verification.

For a discriminant of 4096 bits, we benchmarks the aggregation functions on the same machine as before. We can see that updating the accumulators in the aggregation indeed takes half time as much as verifying a single VDF proof, and verifying the aggregation is as cheap as a normal VDF proof and that proving the aggregation is more expensive than a VDF output, this is due to the absence of intermediary value found when evaluating the VDF input, but less expensive than evaluating a VDF.

| Size Discriminant | #iterations | (ms) | (s) | (ms) |

|---|---|---|---|---|

| 1,000 | 8.0E+00 | 2.3E-03 | 1.7E+01 | |

| 10,000 | 8.0E+00 | 3.0E-02 | 1.7E+01 | |

| 100,000 | 8.0E+00 | 3.0E+00 | 1.7E+01 | |

| 1,000,000 | 8.0E+00 | 3.1E+01 | 1.7E+01 |

6. CDDL Schema for the Ledger

To support Phalanx, one block per interval (every 3600 slots), across 83 intervals per epoch, must include 2 group elements. Each of these elements can be compressed to approximately bits. Based on our recommended discriminant size of 4096 bits:

- 3,104 bits (388 bytes) per element (the benchmarked library adds 4 bytes to each element),

- 6,208 bits (776 bytes) per block (2 elements),

- 515,264 bits (64,408 bytes ≈ 64.4 KB) per epoch (83 blocks).

Phalanx requires a single addition to the block body structure in the ledger: the field phalanx_challenge.

block =

[ header

, transaction_bodies : [* transaction_body]

, transaction_witness_sets : [* transaction_witness_set]

, auxiliary_data_set : {* transaction_index => auxiliary_data }

, invalid_transactions : [* transaction_index ]

+ , ? phalanx_challenge : vdf_attested_output

]The structure phalanx_challenge is defined as follows:

vdf_attested_output =

[ output : vdf_size

, proof : vdf_size

]

vdf_size = [ bytes, bytes .size 388 ]We initially evaluated including the phalanx_challenge (i.e., the VDF attested output) in the block header (instead as proposed in the block body) colocated with the VRF outputs. However, this approach raised concerns due to header size constraints.

The current maximum block header size in Cardano is 1100 bytes, although actual usage today averages around 860 bytes. Since TCP packet limits suggest keeping headers under 1500 bytes (1,460 without headers), the available headroom is approximately 600 bytes. The full vdf_attested_output in its default form requires:

- 388 bytes per group element (assuming the lowest acceptable security parameters)

- 2 group elements (output + proof)

- Total: 776 bytes

This would exceed the 1500-bytes limit, risking fragmentation and violating guidance from the Cardano networking team. We could safely decrease the group element size by decreasing the security parameters if we were to generate new class groups at each epoch. Doing so would however render the protocol more complex and potentially weaken the security of the protocol as we may have more chances to generate a weak class group.

Rationale: How does this CIP achieve its goals?

1. How Phalanx Addresses CPS-21 - Ouroboros Randomness Manipulation?

1.1 Problem Overview

CPS-0021 / Ouroboros Randomness Manipulation examines the Randomness Generation Sub-Protocol within Ouroboros Praos ⚙️, highlighting its vulnerabilities and their implications for Cardano’s security 🔒. Key insights include:

- Randomness Vulnerability: Ouroboros Praos employs VRFs for randomness generation, but this approach is susceptible to grinding attacks, where adversaries manipulate outcomes to influence leader election, threatening Cardano’s fairness ⚖️ and integrity.

- Attack Likelihood: Attacks become significantly more feasible when an adversary controls over 20% of the total stake (approximately 4.36 billion ADA, as of March 2025), while smaller stakes (e.g., 5%) make such attempts highly unlikely over extended periods.

- Economic Barrier: Gaining enough stake to execute an attack requires a substantial investment 💰—billions of USD for a 20% share—posing a financial risk, as a successful attack could devalue the asset and undermine network trust.

- Computational Feasibility: The feasibility of attacks varies widely based on the computational resources an adversary can deploy, becoming progressively more accessible as stake accumulates:

- Small-scale attacks, costing as little as ~$56, are easily achievable with minimal resources, such as a standard computer, making them a low-barrier threat that even individual actors could attempt.

- Large-scale attacks, costing up to ~$3.1 billion, require extensive computational infrastructure, such as large data centers with millions of CPUs or ASICs, placing them in a range from feasible for well-funded entities (e.g., corporations or nation-states) to nearly impractical for most adversaries due to the immense resource demands.

- The intensity of these attacks scales with stake: the more stake an adversary holds, the greater their influence over leader election, amplifying their ability to manipulate randomness. In a simplistic view, this can be likened to manipulating a -bits nonce—a value ranging from to — where higher stake progressively grants more control, potentially allowing full manipulation of the nonce at the upper limit.

- The wide cost disparity reflects how the complexity of the attack—such as the scope of the targeted time window and the depth of evaluation—drastically increases resource needs, acting as a natural deterrent for more ambitious manipulations.

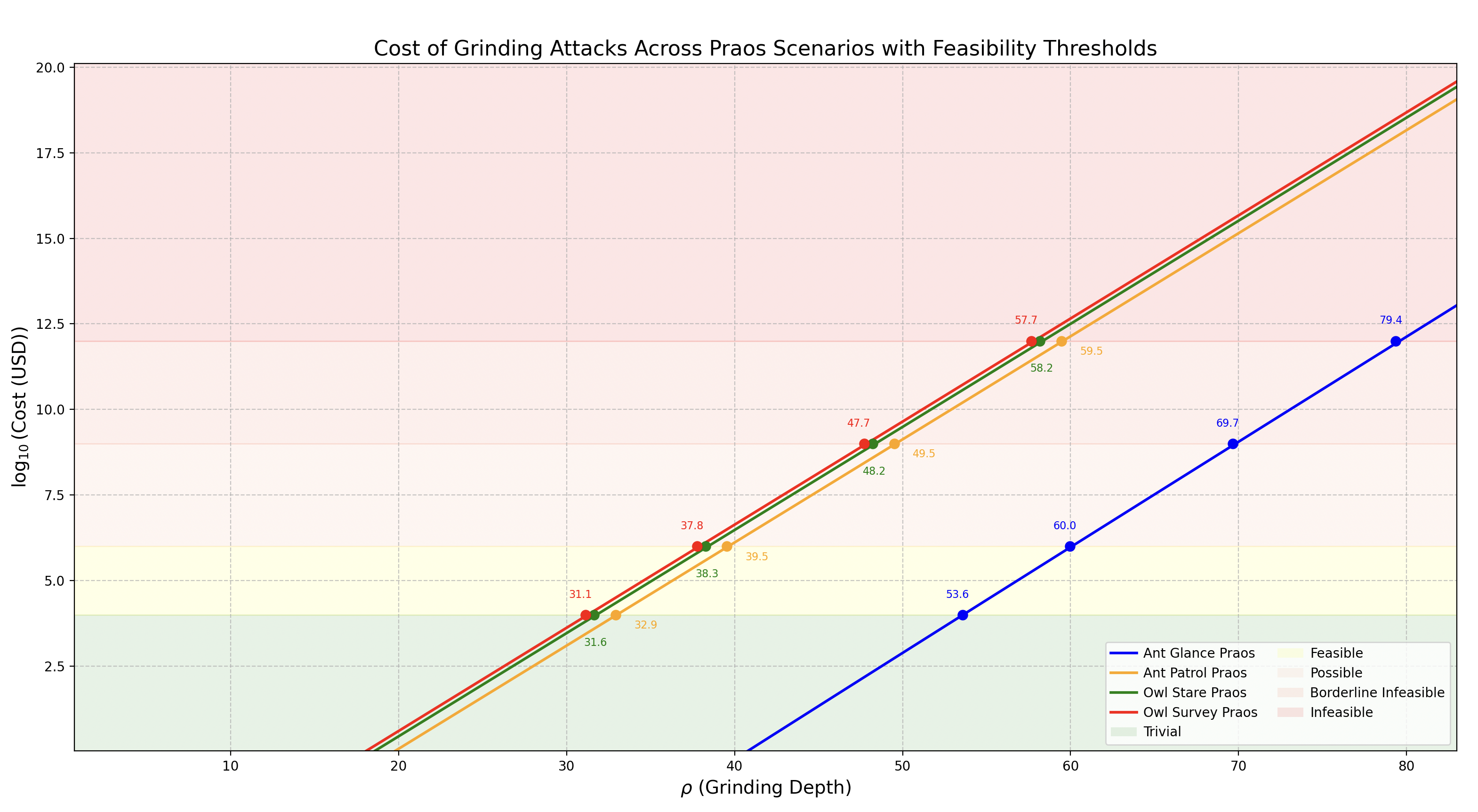

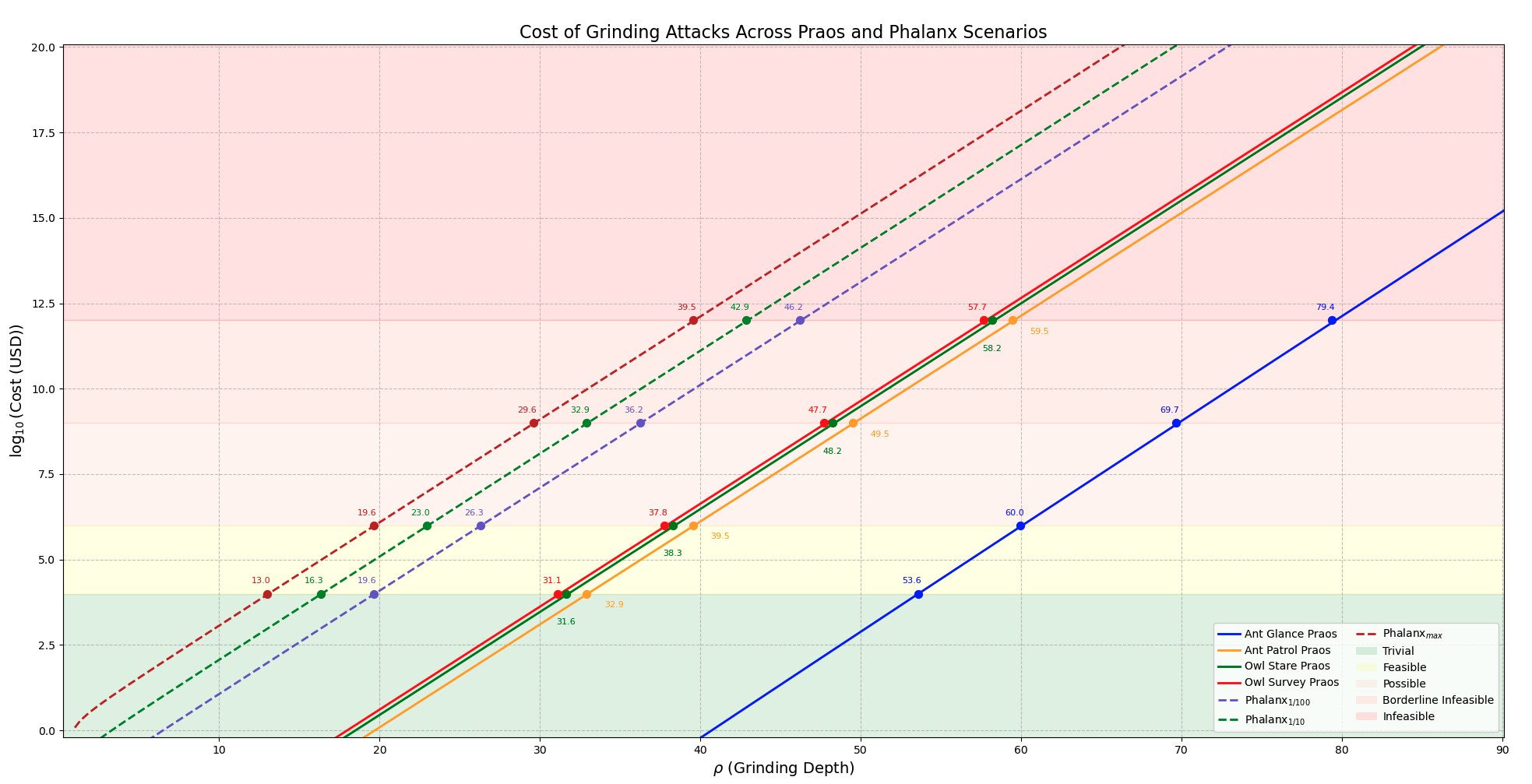

To illustrate the Computational Feasibility, the graph below (sourced from the CPD, Section 3. The Cost of Grinding: Adversarial Effort and Feasibility) maps attack feasibility across four scenarios—Ant Glance, Ant Patrol, Owl Stare, and Owl Survey—based on the nonce value (0 to 256 bits). Each scenario reflects different attack complexities, with feasibility shifting as computational and economic demands grow:

The table below delineates the values at which each scenario transitions across feasibility categories, illustrating the computational and economic thresholds:

| Feasibility Category | 🔵 Ant Glance | 🟠 Ant Patrol | 🟢 Owl Stare | 🔴 Owl Survey |

|---|---|---|---|---|

| 🟢 🌱 Trivial for Any Adversary | ||||

| 🟡 💰 Feasible with Standard Resources | ||||

| 🟠 🏭 Large-Scale Infrastructure Required | ||||

| 🔴 🚫 Borderline Infeasible | ||||

| 🔴 🚫 Infeasible |

Context: The scenarios represent increasing attack sophistication (e.g., Ant Glance is a quick, low-effort attack; Owl Survey is a comprehensive, resource-intensive one). As increases, so does the difficulty, shifting feasibility from trivial (e.g., a lone actor with a laptop) to infeasible (e.g., requiring nation-state-level resources).

These thresholds reveal critical vulnerabilities in Cardano’s current consensus design. Phalanx aims to mitigate these risks. In the following section, we revisit the core computational model, introduce the proposed enhancements, and quantify how they shift the feasibility landscape in favor of security.

1.2 Phalanx Cost Amplification per Grinding Attempt

In Phalanx, we introduce an additional parameter and computational cost, denoted , for each grinding attempt. This cost represents the total cumulative effort required to compute iterations of the primitive. This additional cost directly impacts the total estimated time per grinding attempt, as originally defined in CPD Section 3.3.4 - Total Estimated Time per Grinding Attempt. The baseline grinding time in Praos is:

With Phalanx, the total grinding time per attempt is updated to include :

Where:

- is the VRF evaluation time,

- is the eligibility check time,

- is the time for the hashing operation,

- is the target window size (seconds),

- is the grinding depth,

- is the nonce selection and evaluation time (attack-specific).

- is the additional computational cost of Phalanx

The introduction of substantially increases the computational burden for adversaries, as they must recompute the function for each of the possible nonces evaluated during a grinding attack. In contrast, for honest participants, this computation is distributed across the epoch, ensuring it remains manageable and efficient.

1.3 Phalanx Cost Amplification per Grinding Attack

Building on the updated grinding time formula introduced in the previous section, which incorporates the additional computational cost , we can now revise the formula for a grinding attack from CPD Section 3.4.1 - Formula, where we defined a total attack time that must fit within the grinding opportunity window :

which leads to the lower bound on computational power () :

1.3.1 Formula

Expanding

From Section 1.1, the per-attempt grinding time under Phalanx is:

Substituting this into the inequality:

Expanding in Terms of and

The grinding opportunity window is:

Assuming worst-case upper bound and noting , we substitute:

Bounding :

Rewriting:

Approximating :

Simplified:

Or grouped as:

1.3.2 Estimated Formula Using Mainnet Cardano Parameters

Starting from the final expression at the end of the last section:

Using Cardano’s mainnet values:

- seconds (1 microsecond) – Time to evaluate a Verifiable Random Function.

- seconds (0.01 microseconds) – Time for a BLAKE2b-256 hash operation.

- – Active slot coefficient.

- Slot duration = 1 second.

Since the eligibility check is negligible, set $T_{\text{eligibility}} \approx 0$:

Substitute into the expression:

-

First term:

-

Second term:

The estimated number of CPUs required is:

1.3.3 Impact of Tᵩ on Canonical Scenarios

Now that we have an updated formula, we can evaluate how Phalanx directly affects the cost of grinding attempts when compared to the original CPD scenarios. As previously discussed, the goal is to strike a balance between the effort expected from honest SPOs during an epoch and the computational burden imposed on an adversary attempting to evaluate multiple candidates in preparation for an attack.

To anchor this analysis, we introduce a baseline configuration denoted as : an overhead equal to 1/100 of an epoch, corresponding to slots. This represents a modest but meaningful choice — substantial enough to raise the adversary’s cost significantly, yet conservative enough to avoid overloading honest participants. In contrast, imposing a full-epoch overhead would likely be excessive in practice, potentially destabilizing the protocol or placing undue demands on block producers. We may refer to that upper bound as , and the present section aims to explore and recommend a viable configuration somewhere between this maximum and our conservative baseline.

Since each slot lasts 1 second, the overhead equates to 4,320 seconds, or exactly 1 hour and 12 minutes.

We now revisit the canonical scenarios from CPD Section 3.5 – Scenarios, and extend each one with a Phalanx-enhanced variant that incorporates this fixed computational cost: . The resulting formulas are derived by substituting each scenario’s respective values for and into the base expression from Section 1.2.2, now augmented with the constant Phalanx term .

The table below summarizes the expressions for each scenario:

| Scenario | ||

|---|---|---|

| Ant Glance | ||

| Ant Patrol | ||

| Owl Stare | ||

| Owl Survey |

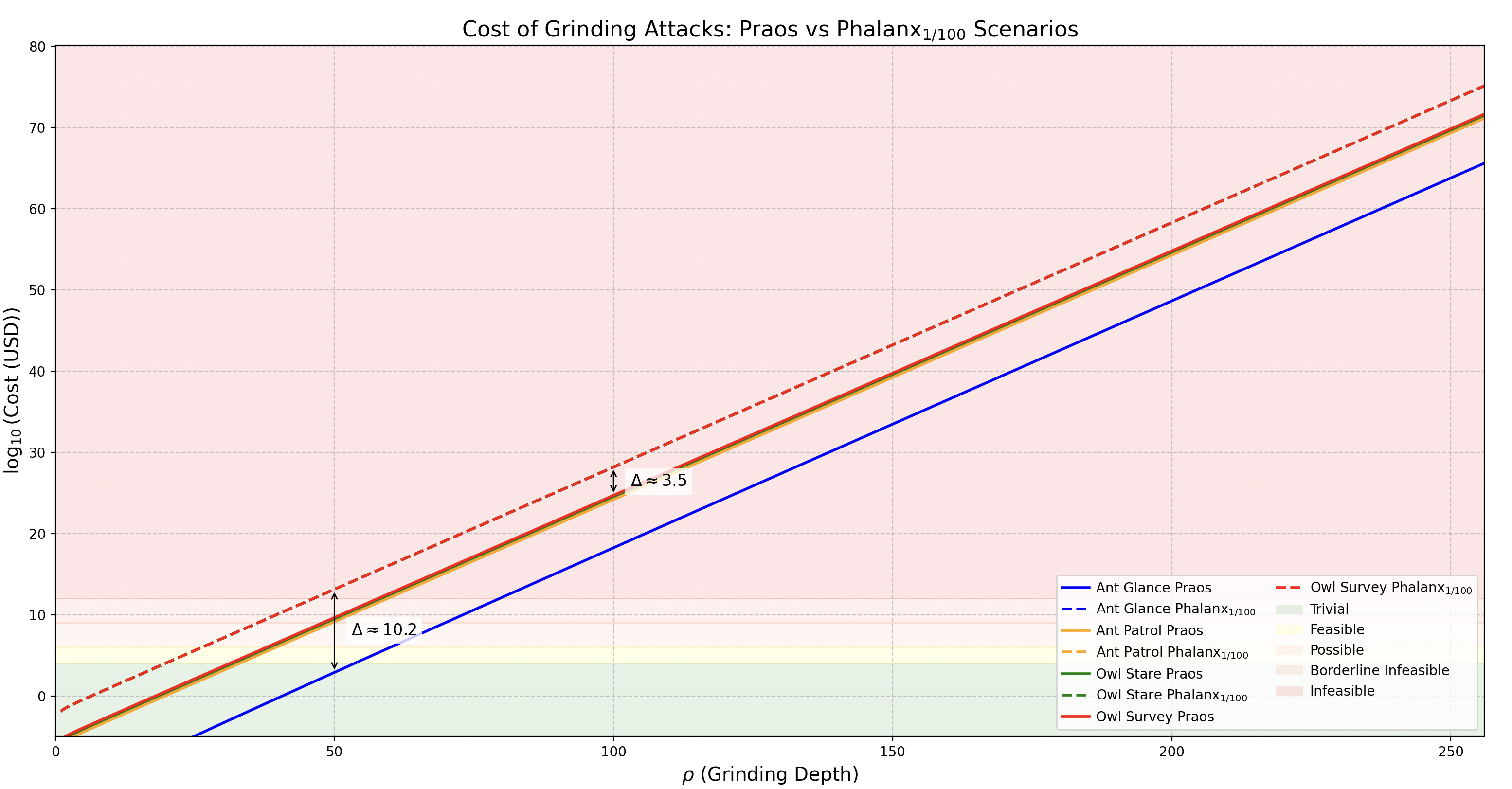

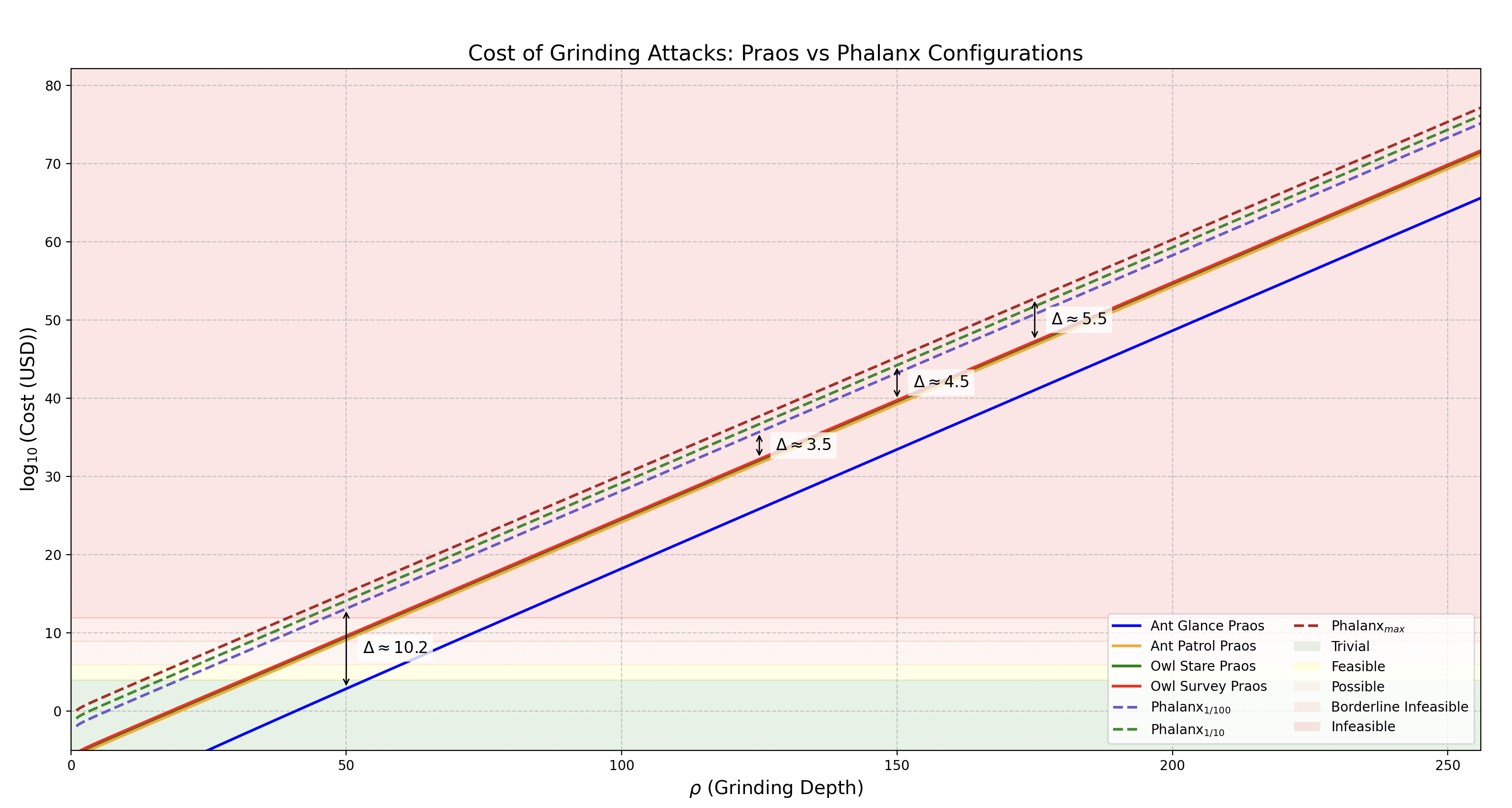

The graph below presents the logarithmic cost (in USD) of executing grinding attacks as a function of the grinding depth (), across both Praos and scenarios.

- Solid lines correspond to the original Praos configurations (Ant Glance, Ant Patrol, Owl Stare, and Owl Survey).

- Dashed lines represent the respective variants, each incorporating a fixed additional computational overhead of .

- The shaded feasibility regions reflect increasing economic difficulty levels, based on thresholds defined in CPD Section 3.6 – Grinding Power Computational Feasibility.

✏️ Note: The Python script used to generate this graph is available here ➡️ scenario_cost_praos_vs_phalanx.py

The graph highlights how the protocol dramatically increases the cost of grinding attacks compared to Praos, using a logarithmic scale to represent costs in USD as a function of the grinding depth :

-

Consistent Cost Increase Across All Values The differences (deltas) between and Praos scenarios remain stable across all grinding depths due to the logarithmic scale. This allows us to make generalizable observations regardless of .

-

Moderate Gap Between Scenarios Within Variations between different scenarios (e.g., Ant Glance vs Owl Survey) are relatively modest. For example:

- At , the cost difference between Owl Survey () and Owl Survey (Praos) is about 3.5 orders of magnitude in .

-

Significant Overhead Introduced by The computational burden imposed by Phalanx is substantial.

- At , the cost delta between Owl Survey () and Ant Glance (Praos) reaches nearly 9.8, representing a 10⁹.⁸× increase in expected cost for the attacker.

- This effectively pushes grinding attacks into the "infeasible" zone for a wide range of strategies.

-

Strategic Uniformity Under All scenario curves tightly cluster together, showing minimal divergence across evaluation complexity () and observation scope ().

- This implies that Phalanx equalizes grinding costs across adversarial strategies.

- Practically, this means defenders (e.g., protocol designers) can reason about attack feasibility without considering specific adversarial tactics. One cost curve is sufficient.

We can now simplify and generalize the grinding cost formulas for different Phalanx configurations, along with their estimated order-of-magnitude improvements over Praos:

| Configuration | Time Budget | Grinding Cost Formula | Cost Amplification |

|---|---|---|---|

| 2 hours | × | ||

| 12 hours | × | ||

| 5 days | × |

N.B. We can note that even with the use of ASICs, with a speed up of 3x to 10x, Phalanx would still add a significant term and reduce the cost amplification to still acceptable levels.

✏️ Note: The Python script used to generate this graph is available here ➡️ scenario_cost_praos_vs_phalanx-full-scenarios.py.

These results confirm that even the minimal configuration () yields a -fold increase in the computational cost of a grinding attack — a formidable barrier for adversaries. More aggressive deployments such as and push this cost further, to and times that of Praos, respectively — while still remaining practical for honest participants.

1.3.4 Impact of Tᵩ on Feasibility Categories

This simplification allows us to revisit and improve the feasibility category table presented in the Problem Overview section :

✏️ Note: The code to generate this graph is available at ➡️ this link.

The tables below present first the original Praos feasibility intervals, followed by the adjusted categories under Phalanx :

| Feasibility Category | 🔵 Ant Glance | 🟠 Ant Patrol | 🟢 Owl Stare | 🔴 Owl Survey | |||

|---|---|---|---|---|---|---|---|

| 🟢 🌱 Trivial for Any Adversary | |||||||

| 🟡 💰 Feasible with Standard Resources | |||||||

| 🟠 🏭 Large-Scale Infrastructure Required | |||||||

| 🔴 🚫 Borderline Infeasible | |||||||

| 🔴 🚫 Infeasible |

The Phalanx tables include delta improvements for each Praos scenario. A positive implies that Phalanx forces infeasibility earlier, i.e., at a lower value, thereby increasing adversarial cost :

| Scenario | |||

|---|---|---|---|

| 🔵 Ant Glance | |||

| 🟠 Ant Patrol | |||

| 🟢 Owl Stare | |||

| 🔴 Owl Survey |

1.4 Conclusion: How Much Risk is Mitigated?

To quantify the security improvement, we compute the percentage reduction in the “Trivial for Any Adversary” interval compared to Praos. This represents the portion of grinding attacks that are now pushed into more difficult feasibility regions.

| Scenario | Praos Trivial | % Reduction | % Reduction | % Reduction | |||

|---|---|---|---|---|---|---|---|

| 🔵 Ant Glance | 53.6 | 19.6 | −63.4% | 16.3 | −69.6% | 13.0 | −75.7% |

| 🟠 Ant Patrol | 32.9 | 19.6 | −40.4% | 16.3 | −50.5% | 13.0 | −60.5% |

| 🟢 Owl Stare | 31.6 | 19.6 | −38.0% | 16.3 | −48.4% | 13.0 | −58.9% |

| 🔴 Owl Survey | 31.1 | 19.6 | −37.0% | 16.3 | −47.6% | 13.0 | −58.2% |

These results show that Phalanx makes low-effort grinding substantially harder, reducing adversarial opportunity for trivial manipulation by up to 76% in the most favorable configuration, and by at least 37% across all attack types and parameterizations.

This concludes our high-level assessment of feasibility mitigation in security terms. In the next section, “2. How Phalanx Improves CPS-17 – Settlement Speed?”, we will examine how this risk reduction translates into a much more tangible and practical benefit: faster and more reliable settlement times in Ouroboros.

2. How Phalanx Improves CPS-17 - Settlement Speed?

Let us recall that, like Bitcoin, Cardano relies on probabilistic and unbiased randomness for leader election. As a result, both systems inherently provide statistical consensus guarantees. For Stake Pool Operators (SPOs), being elected as a slot leader grants some control over the protocol. This control increases with stake—more skin in the game means more chances to be selected. However, due to the randomized nature of the leader election, SPOs cannot predict or influence exactly when they will be selected.

This makes undesirable events—such as regional concentrations of slot leadership deviating from the expected distribution, or control over multiple consecutive blocks—statistically quantifiable and, in the absence of grinding attacks, extremely unlikely. These include risks like rollbacks, -common prefix violations, or private chain attacks. This is precisely the security model Ouroboros Praos was designed around—and so far, it has held up well.

However, if adversaries manage to control more than 20% of the stake, they gain significant and exponentially growing grinding power. This power allows them to bend the statistical distribution of events in their favor. For example, achieving a grinding depth of 79.4 means they can select from among (~ ) possible distributions to optimize the timing and nature of their attacks. At that scale, they can deliberately amplify the probability of "bad events" and execute a variety of targeted attacks against the protocol.

In this section, we narrow our focus to a specific class of such bad events: those that bias or delay the confirmation time of transactions on Cardano. We’ll show how this issue is directly tied to adversarial grinding power, and how reducing that power leads to faster and more reliable settlement guarantees, thereby directly addressing CPS-0017 / Settlement Speed.

2.1 Settlement times without grinding attacks

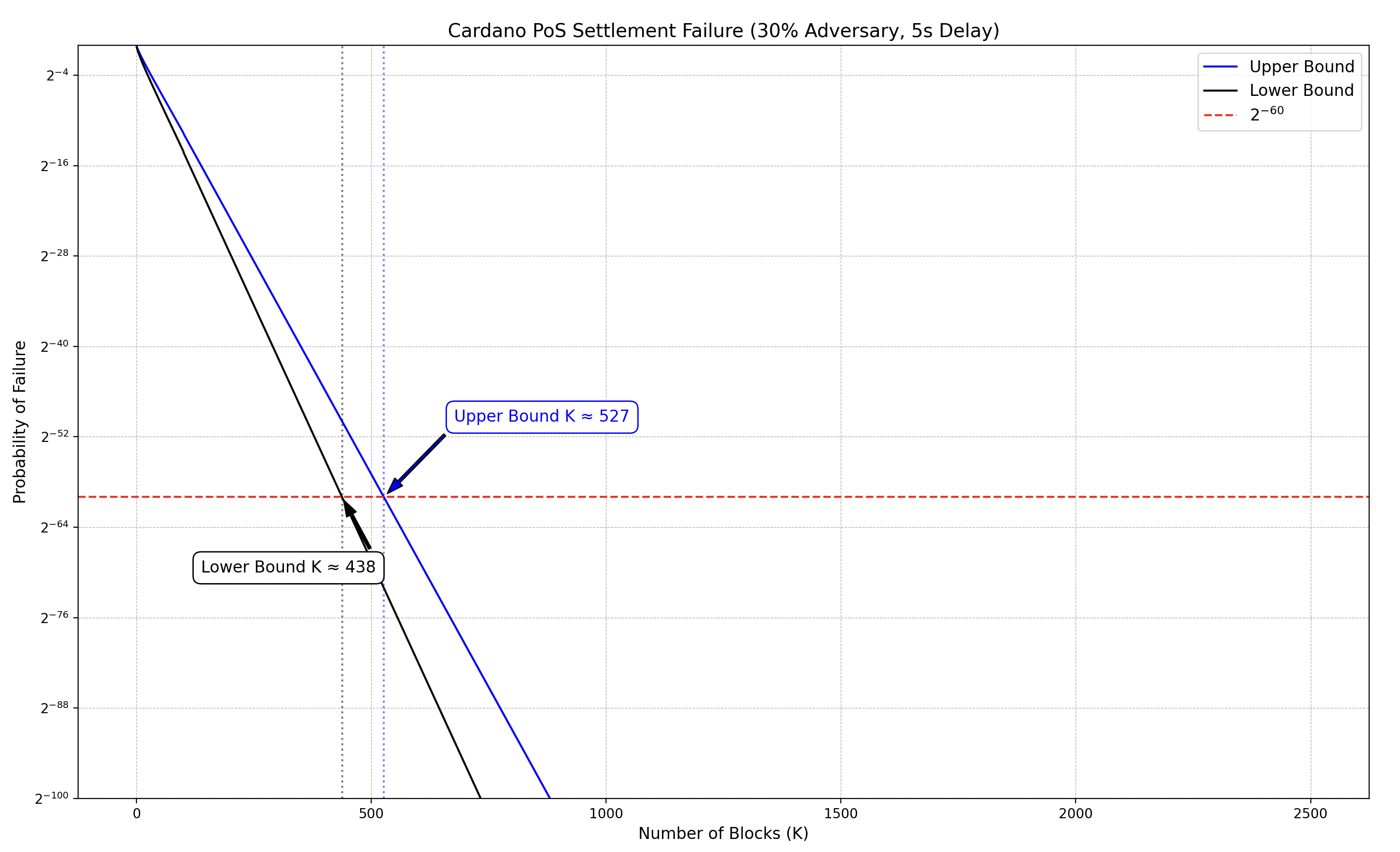

In longest-chain consensus protocols like Ouroboros Praos, settlement time refers to the duration required for a transaction to be considered irreversibly included in the blockchain with high probability. Without grinding attacks, this is primarily determined by the risk of chain reorganizations (e.g., forks or common prefix violations), where an adversary might create a competing chain that overtakes the honest one. The goal is to compute the minimum number of confirmations (blocks appended after the transaction's block) needed to achieve a target security level, such as a failure probability below or .

The methodology for computing settlement bounds, as detailed in the paper "Practical Settlement Bounds for Longest-Chain Consensus" (Gaži et al., 2022), uses a phase-based analytical model that divides time into intervals separated by the maximum network delay (e.g., 2-5 seconds for Cardano). It tracks metrics like "margin" (for PoW) or "reach" and "margin" (for PoS) to bound the probability of an adversary overtaking the honest chain.

To obtain metrics, you can run the software from https://github.com/renling/LCanalysis/, which implements these evaluations in MATLAB. Clone the repo, open PoSRandomWalk.m (for PoS like Cardano), set parameters (e.g., honest ratio (30% of stake adversary), network delay ), and run to output failure probabilities vs. confirmations. Below is a representative graph:

✏️ Note: The Python script used to generate this graph is available here ➡️ settlement-time-without-grinding.py.

A 60-bit confidence level (failure probability ≤ ) is a common threshold where events are considered negligible in practice. In the graph above, for example, this corresponds to a confirmation interval of [438, 527] blocks. Beyond this, the choice of confidence level and thus the number of confirmations required for transaction settlement, becomes application-specific, balancing security against efficiency.

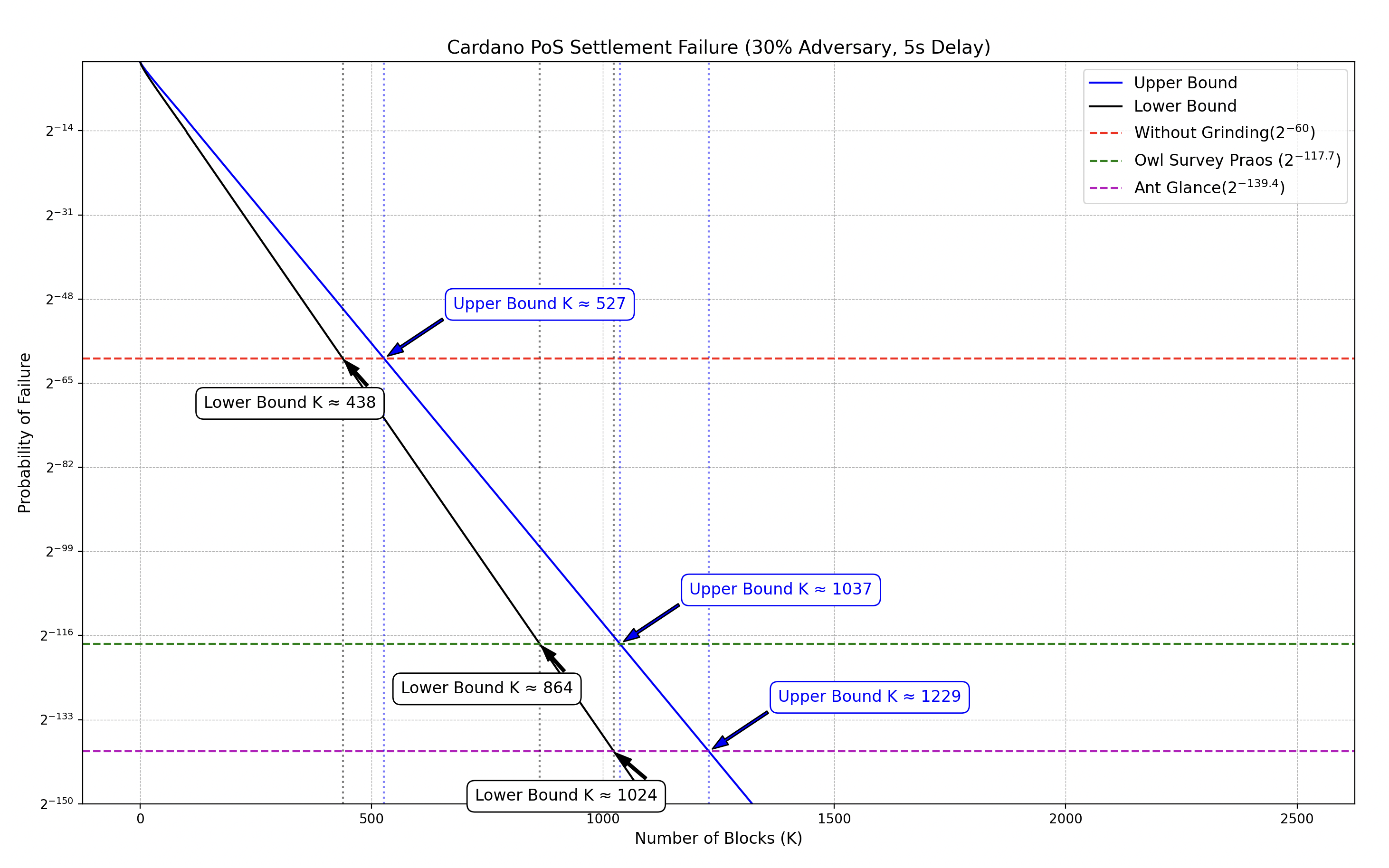

2.2 How Grinding Power Affects Settlement Times

What does it mean for settlement times when, in a scenario like the Owl Survey Glance, the adversary can observe a large portion of the candidate randomness distribution and perform an attack with a grinding power of ?

A grinding power of / a grinding depth of 57.7 bits, implies that:

- The adversary can simulate approximately distinct randomness outcomes, derive the associated leader schedules for the next epoch, and select the most favorable one.

- This drastically increases the likelihood of bad events (e.g., settlement failures) compared to a protocol with unbiased randomness.

- More precisely, if a bad event occurs with probability under honest randomness, then an adversary capable of evaluating different randomness candidates can amplify this probability up to (by the union bound).

In practical terms, such grinding power extends the number of confirmations required to reach a desired security level. The stronger the adversary’s grinding capability, the longer it takes for a transaction to be considered truly settled.

For example, assume that on mainnet, a rollback probability of is achieved for a block at index after subsequent blocks are appended. If an adversary possesses grinding power of , the effective risk increases to:

To preserve the original confidence level under adversarial grinding, the protocol must instead target a baseline security of:

This implies that many more blocks must be appended before a block is considered settled, thereby significantly increasing settlement times.

In the example above, we used the Owl Survey Glance scenario, which is the most computationally expensive in terms of grinding cost. However, when establishing a protocol-wide security threshold, it is more prudent to anchor on the worst-case grinding power — that is, the scenario with the highest grinding depth. In our analysis, this is the Ant Glance scenario, with a grinding depth of 79.4 bits. To maintain the original confidence level under such an adversary, the protocol must instead target:

This defines a stricter baseline and ensures security even against the most favorable conditions for the adversary. In our previous scenario (30% adversary and 5-second network delay), the effect can be visualized as follows:

✏️ Note: The Python script used to generate this graph is available here ➡️ settlement-time-praos.py.

Where the confirmation interval was [438, 527] blocks in the absence of a grinding attack, it increases to [864, 1037] blocks under grinding power in the Owl Survey scenario, and further to [1024, 1229] blocks under the same grinding power in the Ant Glance scenario.

Assuming a block is produced every 20 seconds, this extends the required confirmation window from approximately [2.43, 2.93] hours to [4.80, 5.76] hours in the Owl Survey case, and up to [5.69, 6.83] hours in the Ant Glance case — more than doubling the settlement time.

As discussed in Section 1: How Phalanx Addresses CPS-21 – Ouroboros Randomness Manipulation, this is a key challenge in Praos: the presence of multiple attack scenarios with varying grinding power makes it difficult to define a single, consistent security threshold for settlement — a complexity that Phalanx simplifies by unifying the treatment of adversarial power across scenarios.

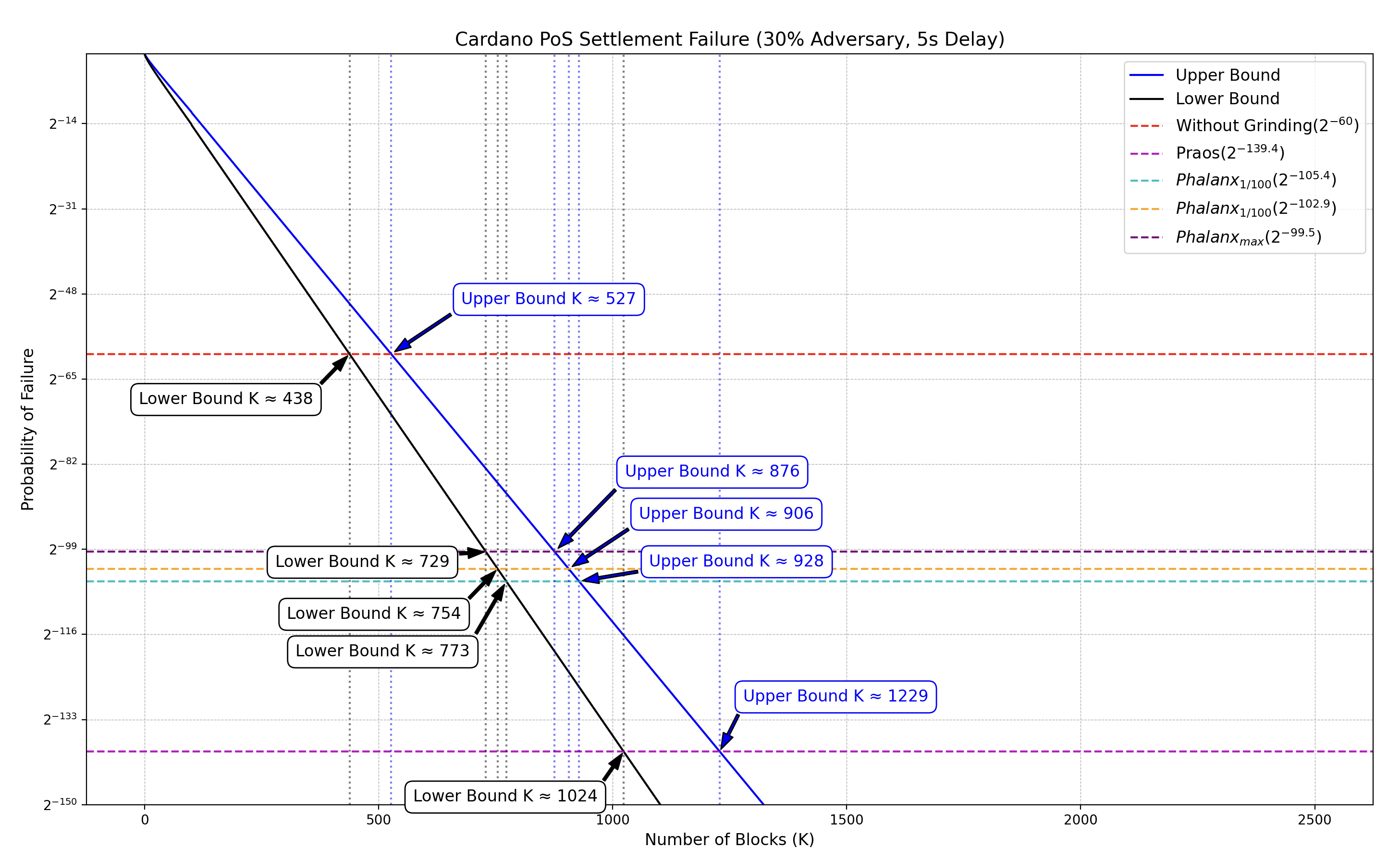

2.3 How Phalanx improves compared to Praos ?

In the conclusion of Section 1.4: How Much Risk Is Mitigated?, we quantified Phalanx's improvement over Praos in terms of grinding depth reduction as follows:

| Scenario | |||

|---|---|---|---|

| 🔵 Ant Glance |

In our previous examples, we are given that under Praos, the Ant Glance scenario results in a required security level of , which translate into the following threshold for the Phalanx configurations :

| Configuration | Computation | Resulting Security Level |

|---|---|---|

This can be visualized as follows:

✏️ Note: The Python script used to generate this graph is available here ➡️ settlement-time-phalanx.py.

In the absence of a grinding attack, the confirmation interval was [438, 527] blocks. Under the actual version of Praos, accounting for a grinding depth of 79.4 bits in the Ant Glance scenario, this interval increases to [1024, 1229] blocks.

However, with Phalanx applied, the required confirmation windows are significantly reduced:

| Configuration | Confirmation Interval | Duration @ 20s/block |

|---|---|---|

| [773, 928] | ~4.29 h → ~5.16 h | |